Background

Iriss held the Spring into Evidence event on March 2nd 2020 at The Barracks Conference Centre in Stirling. The aim of the day was to raise awareness of the support and information available to help social services workers find and use evidence in their practice. Forty-seven practitioners and managers attended from public, third and independent sector organisations. The programme mixed plenary presentations, activities and breakout workshops, with inputs from Iriss, SSSC, the Care Inspectorate and Audit Scotland. Takeaways that people reported from the day included:

- Refreshed knowledge

- More understanding of the importance of using evidence to improve services

- The value of different types of evidence

- Different approaches to evidence gathering

This report provides a summary of activities, key messages, links to follow-up resources and evaluation feedback. The event programme is attached below.

Summary of plenary presentations

Over the course of the day, speakers highlighted the importance of having a clear purpose and question(s) to answer in order to provide a structure and focus for an evidence search or data collection activity. Below are key messages from each speaker, with embedded links to more information and contact details.

Iriss Evidence Search and Summary Service (ESSS) and the evidence context: Robert Sanders – Iriss Information Specialist

Robert opened the presentations by setting out what we mean by evidence and posing framing questions about ‘good’ evidence, before introducing the Iriss Evidence Search and Summary Service (ESSS).

Evidence comes in many forms

- Published research, such as journal articles, academic papers, books and research findings

- People’s lived experiences. These can come from interviews, surveys and questionnaires, documentaries, case studies, books, even social media interactions.

- Audit and evaluation data (both quantitative and qualitative)

- Grey literature, such as research reports, PhD theses, conference proceedings, white papers, government department reports, and reports from business and industry

- Knowledge and experience from practice, from colleagues and peers. This is a form of evidence: the insights people develop over years of practice are a valuable resource.

‘Good evidence’

The concept of ideal evidence comes from discussions and debate around the value of different research methods and designs and how these are viewed. There is a great deal of nuance and variation in social work and social care, with many people involved across a number of different settings, so it is important to consider:

- Randomised controlled trials or systematic reviews are often seen as the gold standard, but there is more to evidence than this, particularly if we need to understand in more detail why something did or didn’t work, or how it works in more complicated real-world settings.

- Decisions in health and social care are complex and should draw on different sources of information

- The Iriss perspective is that research sits alongside the experiences of people who use or are supported by services, practice knowledge and practitioners’ experience.

ESSS

The Iriss Evidence Search and Summary Service is a free service for anyone (with a few exceptions) working in social services in Scotland. We cover any topic related to social services, everything from arts to housing to criminal justice to digital inclusion. Depending on your needs and what research is out there we can summarise or review the main themes to give you an idea of the different perspectives, approaches or methods. We can provide a reading list or breakdown of key resources, or produce a more in-depth review.

- Is there a particular problem you want a solution for?

- Is there a problem you have a solution for and you want to know if it will work?

- Is there a problem with more than one solution and you want to know which one is most effective?

- Have you noticed areas where there are gaps in research and evidence?

Care Inspectorate: Evidence for improvement. Aidan McCrory, Inspection Advisor

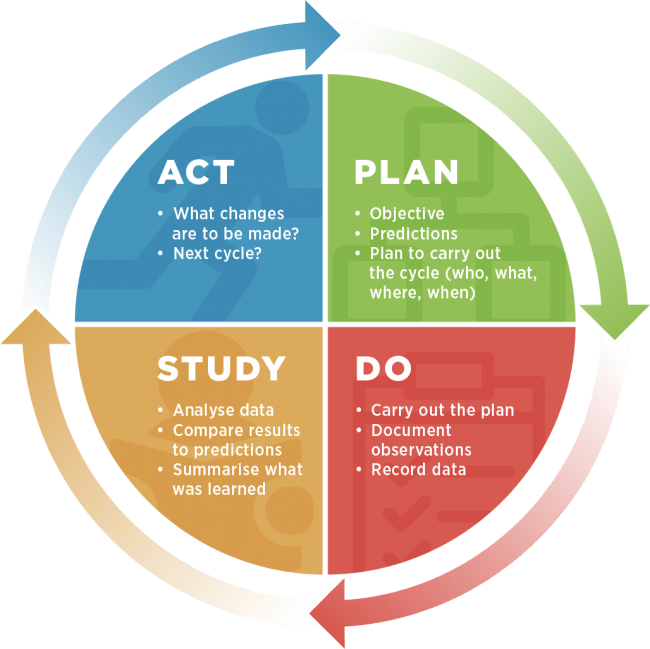

Aidan asked the group to reflect on whether their service collects data for improvement or for compliance alone. Focussing on improvement, he talked through the Plan Do Study Act (PDSA) methodology and the role of evidence in improving services. The PDSA method has two stages. The first is to think through and answer these questions:

- What are we trying to accomplish?

- How will we know that change is an improvement?

- What change can we make that will result in improvement?

Secondly, once you have answers to these three questions, move through a PDSA cycle:

- Plan: Make predictions about what will happen. Plan how to carry out the cycle: who, where, what and when?

- Do: Carry out the plan, document observations and record data.

- Study: Analyse the data, compare the results to your predictions and summarise.

- Act: What changes need to be made? Start a new PDSA cycle?

Overall, it is crucial to establish a shared understanding within a team or service of its purpose for producing, collecting and analysing evidence before starting a PDSA cycle. Without this, interventions can be harder to implement, measure and analyse to see whether they are making a positive change. Aidan also explored the question ‘How do we know that change is an improvement?’, following this with a practical example of planning, collecting and analysing data to measure a reduction in falls. Aidan’s slides, with more detail on this example, are attached below. For more information about the improvement model and PDSA, see the Care Inspectorate online resource Starting your improvement journey, which includes a link to a downloadable PDSA template.

Audit Scotland: perspectives on evidence. Jillian Matthew, Senior Manager, Performance Audit & Best Value

Jillian introduced the remit of Audit Scotland and gave an overview of the thematic areas of some recent audits, as well as forthcoming work that will provide a source of evidence relevant to social services. These areas include Health and Social Care Integration, Community Empowerment, planning for national outcomes, and Early Learning and Childcare.

The role of Audit Scotland

Audit Scotland’s aim is to help ensure that public money is spent well and provides value for money for the people of Scotland. Audits are carried out independently of the Government, on behalf of the two parties responsible for public audit in Scotland:

- The Auditor General for Scotland (AGS) – whose remit includes health boards, colleges and central government bodies such as Police Scotland, as well as the Scottish Government itself.

- The Accounts Commission – responsible for the audit of local government

Themes emerging from audits

Common themes across audits on public services include:

- Sustainability of services – financial pressures, demographic change, increasing demand, increasingly complex needs

- Public service reform – complex governance arrangements, integrated working, shared resources, partnership working, community planning

- Legislative change – and the impacts of this on financial pressures and demands on management and staff

- Staffing issues – recruitment difficulties, pressures in some geographic areas and roles

- Problems with availability and reliability of data for monitoring and evaluation

- Slow progress with transformation, new models of service delivery, digitisation and outcomes-focused approaches

Forthcoming work

A report on social care sustainability is planned for 2021, with work intended (as planned before Covid-19) to begin in summer 2020. The scope is still in development, but the intended focus is on the following areas: service models; outcomes for people; spending on social care; identifying key pressures and risks; and medium- to long-term planning strategies. Citizen-focused audit approaches are also being considered.

- Audit Scotland perspectives on evidence slides (also attached below)

Workshop summaries

The event featured two rounds of breakout workshops that participants could elect to attend. Summaries from each workshop follow below.

Using the SSSC Supervision Learning Resource to improve supervision experiences (Lorna Dalton, Learning Advisor, SSSC)

This workshop introduced the SSSC Supervision Learning Resource, with facilitated discussion around the purpose of supervision, practitioner experiences of supervising or being supervised, and resources that provide evidence of what good supervision looks like. Key messages from group discussions:

- Experiences of supervision continue to be mixed. Most practitioners said they felt supported through the process and that their supervision sessions were part of a wider package of care; for them, the balance between support and surveillance was right. For a smaller group, however, supervision was still either non-existent or severely lacking.

- People agreed that it was useful to ask themselves, and those they work with, why they do supervision in the first place. Some attendees had already been doing this and were using that thinking to shape how, and in what formats, supervision could be conducted in future.

- When people shared personal experiences, there was praise for managers who had been particularly good at supervision, especially those who let the supervisee lead and direct the discussion. For practitioners who had since become managers themselves, these experiences helped shape how they behaved and ran their own sessions.

- The resources on the SSSC and Iriss pages were very welcome, and people were keen to use some of them to take back to their own teams (and had already done so in some cases).

Associated resources:

- SSSC Supervision Learning resource workshop slides (also attached below)

- SSSC Supervision Learning resource

- Iriss Insight 30, Achieving effective supervision by Martin Kettle

- Iriss case studies: Leading change in supervision — messages from practice

Introduction to the Navigating Evidence tool (Josie Vallely, Project Manager, Iriss)

The workshops served to introduce people to the new Navigating Evidence resource, and encourage them to reflect on their own use of evidence in practice.

The tool is designed for Newly Qualified Social Workers in their first year of practice; however, it is applicable across all stages of practice as a tool for self-reflection and for supporting others through practice teaching and supervision.

In groups we reflected on the different places we find evidence. There were the most obvious candidates: journals, policy, peers and formal training opportunities. We also unearthed some interesting examples that may not be immediately obvious, such as complaints procedures and Twitter feeds.

Using these examples, we went on to work through the Comfort Zone tool. This tool encourages people to explore which forms of evidence they are most comfortable using and how they could expand this comfort zone to include other forms of evidence. Interesting discussion followed about the different ways people access evidence and how people's learning styles influence their choice of evidence. We also acknowledged other challenges in using evidence, such as the sheer volume of evidence out there: accessing evidence can feel overwhelming when the internet allows us to find so many resources.

We also explored the Intuition Iceberg tool. Often in conversations about evidence we can see a dichotomy created between intuition or ‘gut feeling’ and ‘real evidence’. This tool asks us to reflect on this and take time to explore the layers of knowledge within gut feelings and what evidence they are based on.

It was a short 45-minute taster session. General feedback suggests that it was well received and that practitioners can see plenty of scope for using the tool in their practice and in supporting others.

Building evidence confidence (Andreea Bocioaga, Information Specialist, Iriss)

This workshop aimed to encourage people to uncover the underlying assumptions we hold when we think about evidence. Discussion focused on surfacing and recognising personal biases about evidence, for example identifying our preferred sources or forms of evidence and why we prefer them, along with judging what counts as ‘trustworthy evidence’. The questions below can help you judge the reliability of the evidence you encounter.

| Criterion | Question to ask |
|---|---|
| Credibility | Are you convinced the evidence reflects what is happening, or is it just one side of the story? |
| Transferability | Are you convinced that the claims the evidence makes are transferable to other groups or settings? If not, what does that mean for how you apply it to your setting? |
| Reliability | Does it give enough information to recreate this study or intervention if you wanted to? For example, enough detail about the steps taken to collect and analyse the data, and who was involved and how? |
| Acknowledgement of limitations | Does the author acknowledge or reflect on their own biases and the limitations of the research or evaluation? |

Take a look at the Evidence confidence slides to find out more background about this workshop and additional questions to prompt reflection on your approach to evidence. These will work well for individual use or as a structure for a team discussion or supervision.

Evidence snakes and ladders — what helps us and holds us back?

This table activity identified our evidence snakes (the things that hold us back from finding and using evidence effectively) and ladders (what helps us). A summary of what was discussed and a range of links to resources and guidance can be found in the Summary report of evidence snakes and ladders activity (attached at the bottom of this page). In the Coggle diagram Evidence snakes and ladders, snakes are marked with ❌ and ladders with ✅. You can use the themes and key points in the visual as prompts for discussions in your service to understand more about how your team finds, gathers and uses evidence.

Question Wall

A number of questions were posted on the Question Wall over the course of the event. We have incorporated answers into this report at relevant points. Links to information and resources about gathering, using and assessing the quality of evidence are available in the Snakes and Ladders activity summary report and in the Evidence confidence slides.

Event evaluation

Feedback on the event showed that overall people found it useful for learning about resources and approaches to using evidence in practice.

We asked participants to rate their confidence with finding and using evidence for their work at the start and end of the event. The question was:

How confident do you feel about finding and using evidence for your work (at the start/ end of today)?

- Not confident

- Quite confident

- Confident

- Very confident

49% of respondents rated themselves as either ‘quite confident’, ‘confident’ or ‘very confident’ with finding and using evidence at the start of the day and did not change their self-rating. 46% of respondents reported an increase in confidence of one point on the scale, from a starting rating of ‘not confident’ or ‘quite confident’. The remaining 5% of respondents reported an increase of two points on the scale, all of them moving from ‘not confident’ to ‘confident’.

95% of respondents rated the venue and facilities as ‘Very Good’ or ‘Good’.

At the close of the day we also asked people to reflect on three questions, with responses summarised below:

What has most inspired you about evidence today?

- Refreshed knowledge and reinforced importance of using evidence to improve services

- The value of different types of evidence

- The importance of asking the right questions and measuring the important stuff

- Different approaches to evidence gathering

- The SSSC supervision resource and the Care Inspectorate's improvement methodology were very helpful

What will you do differently or next as a result?

- Reflect and make time to discuss with colleagues

- Make use of online resources

- Review supervision regularly and connect supervision with practice

- Find a complementary mix of evidence methodologies

- Consider and be mindful of my evidence biases

- Think about my purpose for gathering evidence, involve people on frontline

- Thinking about ‘is it the right evidence’?

- Interpretation of evidence — am I applying it properly?

- Possibility of using diverse sources of evidence and recognise limitations of certain types of evidence

What further support would help promote the use of evidence in your service?

- Forums for sharing evidence and best practice

- Connections/partnership working: a joined up approach to information sharing

- Something that gives me an overview or knowledge of what is out there

- Case studies and concrete examples of using evidence.

- Consistency of evidence demands from LAs, Scot Gov, regulators etc

- Training info — measurable outcomes in dementia

- Access to academic reports and journals as part of my professional registration fee

We take on board feedback that the day's schedule packed in a wide range of inputs, and that a number of people would have benefited from more time for whole-group discussion, in particular around the themes arising from the Snakes and Ladders activity and the Question Wall. We have integrated responses to the Question Wall into this report as far as possible. Participants would also have liked to see more practical examples of how organisations use evidence in practice, and we recognise that, for participants who were already confident in using evidence, the event was pitched at a more general level than they needed. We will consider these points for future events.